AWS Lambda Cold Starts

One of the potential downsides of using AWS Lambda as a serverless technology is the issue of cold starts. When a Lambda function sits behind Amazon API Gateway and serves a user's HTTP requests, the time it takes to start a new execution environment, load all the libraries into memory and run the initialization code can noticeably hurt the user experience. This is especially true for languages such as Java and C#, whose runtimes (the JVM and the .NET CLR) must start up and just-in-time compile code before the first request can be handled.
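To make that cost concrete, here is a minimal sketch in Python (used only for illustration; the same split applies to a .NET function) of where the cold start work lands. Code at module level runs once, when a new execution environment is initialized; the handler runs on every invocation. The `GREETING` value stands in for hypothetical expensive setup, and the `coldStart` flag in the response is an illustrative addition, not something Lambda provides.

```python
import json
import time

# Module-level code runs once per execution environment, i.e. during the
# cold start. Heavy imports and client construction belong here.
_init_started = time.monotonic()
# Hypothetical stand-in for expensive setup (loading libraries, SDK clients, config).
GREETING = "hello"
INIT_SECONDS = time.monotonic() - _init_started

# True until the first invocation in this execution environment is served.
_cold = True


def lambda_handler(event, context):
    """Runs on every invocation and reports whether this one paid the cold start."""
    global _cold
    was_cold = _cold
    _cold = False
    # API Gateway proxy integrations expect a statusCode and a string body.
    return {
        "statusCode": 200,
        "body": json.dumps({"message": GREETING, "coldStart": was_cold}),
    }
```

Invoking the handler twice in the same process reports `coldStart: true` the first time and `false` afterwards, which mirrors what happens inside one warm execution environment.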

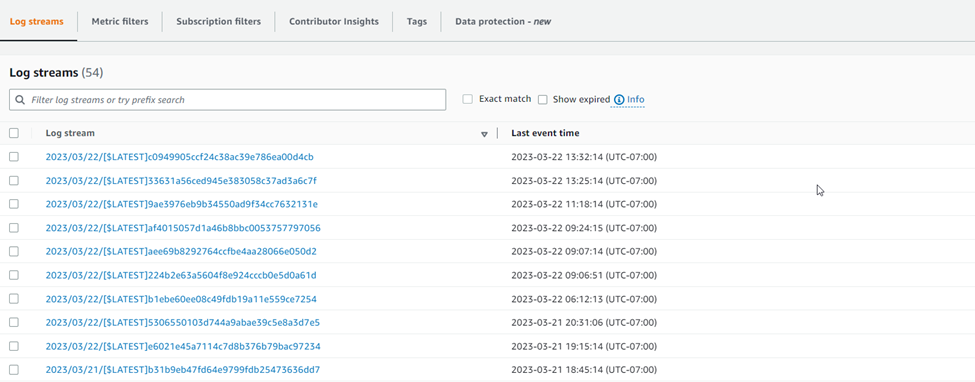

A good deal of work went into reducing cold start times in .NET 6, but they are still an issue. One way to get around a cold start is to keep a Lambda function “warm”, that is, to make sure an already-initialized execution environment is available so that a request does not have to wait for a new one to be created and loaded from disk. A common way to keep a container warm is to invoke the function every minute or so: if the function has not been used for around 5 minutes (AWS does not document a fixed idle timeout), AWS destroys the container and the next request has to bring it back up from scratch. In Amazon CloudWatch Logs, each of these cold starts results in a new log stream, like so:
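A minimal Python sketch of the “ping every minute” approach, assuming the ping comes from an Amazon EventBridge (CloudWatch Events) scheduled rule with the schedule expression `rate(1 minute)`. Scheduled events arrive with `"source": "aws.events"` and `"detail-type": "Scheduled Event"`, so the handler can recognize them and return immediately. The `is_warmup_ping` helper and both response shapes are hypothetical.

```python
import json


def is_warmup_ping(event):
    """True for events delivered by an EventBridge scheduled rule."""
    return (
        isinstance(event, dict)
        and event.get("source") == "aws.events"
        and event.get("detail-type") == "Scheduled Event"
    )


def lambda_handler(event, context):
    if is_warmup_ping(event):
        # Reaching this point means the execution environment is initialized;
        # skip the real work so the ping stays cheap.
        return {"warmup": True}
    # Normal API Gateway request path (hypothetical business logic).
    return {"statusCode": 200, "body": json.dumps({"message": "ok"})}
```

One scheduled ping keeps only one execution environment warm; if several requests arrive at the same time, Lambda can still start additional environments, each with its own cold start.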

As you can see from the snapshot, each separate log stream in the log group is the result of another Lambda cold start. For the application to be as responsive as possible, there should be only one of these entries, created by the initial cold start, leaving only one entry to click on for details.
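The link between idle time and new log streams can be sketched with a small Python simulation. It assumes requests are handled one at a time by a single execution environment, and that AWS discards the environment after a fixed idle period of 5 minutes (the figure used above; AWS does not guarantee any particular value). Each counted cold start corresponds to one new log stream. `count_cold_starts` is a hypothetical helper.

```python
def count_cold_starts(invocation_times, idle_timeout=300.0):
    """Count cold starts for a single, non-concurrent execution environment.

    invocation_times: request timestamps in seconds (any order).
    idle_timeout: seconds of inactivity after which the environment is
    assumed to be discarded.
    """
    cold_starts = 0
    last_used = None
    for t in sorted(invocation_times):
        if last_used is None or t - last_used > idle_timeout:
            cold_starts += 1  # new environment, hence a new log stream
        last_used = t
    return cold_starts


# Three user requests spread over an hour, with no warming.
requests = [0, 1200, 3000]
# A warm-up ping every 60 seconds over the same hour.
pings = list(range(0, 3600, 60))

print(count_cold_starts(requests))          # 3
print(count_cold_starts(requests + pings))  # 1
```

Without warming, every request in this example lands after the idle timeout and pays a cold start; with a ping every minute, only the very first invocation does.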

One possible way to reduce the cold start problem is to use Lambda layers. A Lambda layer is a .zip archive of libraries that you upload to AWS once and that AWS manages for you; when the function starts, AWS extracts the layer's contents into the execution environment under `/opt`. Using layers, instead of bundling every library inside the function's own deployment package, may reduce the cold start time somewhat.
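For a Python function (again only for illustration, since this post's context is .NET), a layer's packages go under a top-level `python/` directory in the archive; Lambda extracts layers into `/opt`, and `/opt/python` is on the Python runtime's module search path. The sketch below builds such an archive with the standard library from a local directory of already-installed packages. The `build_layer_zip` helper and the directory names are hypothetical.

```python
import os
import zipfile


def build_layer_zip(site_packages_dir, zip_path):
    """Zip every file under site_packages_dir beneath a top-level python/ folder."""
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as zf:
        for root, _dirs, files in os.walk(site_packages_dir):
            for name in files:
                full_path = os.path.join(root, name)
                rel_path = os.path.relpath(full_path, site_packages_dir)
                # Lambda extracts the layer to /opt, so this ends up in /opt/python/...
                zf.write(full_path, os.path.join("python", rel_path))
    return zip_path
```

The resulting archive could then be published with `aws lambda publish-layer-version --layer-name <name> --zip-file fileb://layer.zip` and attached to the function, so the function's own package only has to contain its handler code.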

To effectively eliminate the cold start problem altogether, however, one could run an always-on Docker container in Amazon ECS, either on EC2 instances or on AWS Fargate. These options may be more costly than keeping the Lambda function “warm” by invoking it every minute or so. In my next blog post I will attempt to calculate the costs of these two approaches: keeping the Lambda function warm by invoking it every minute, versus having a Docker container always running in AWS Fargate.
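The shape of that comparison can be sketched as simple arithmetic. The Python functions below take unit prices as parameters instead of hard-coding them, because AWS prices differ by region and change over time; current values are on the Lambda and Fargate pricing pages. The sketch assumes a 30-day month, one warm-up ping per minute with a fixed duration and memory size, and a single always-on Fargate task, and it ignores free tiers, data transfer and any load balancer in front of the task. All names are hypothetical.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 24 * 30  # assumes a 30-day month


@dataclass
class LambdaPrices:
    per_request: float    # USD per invocation
    per_gb_second: float  # USD per GB-second of compute


@dataclass
class FargatePrices:
    per_vcpu_hour: float  # USD per vCPU-hour
    per_gb_hour: float    # USD per GB-hour of memory


def lambda_warming_cost(prices, memory_gb, ping_duration_s, pings_per_minute=1):
    """Monthly cost of the warm-up pings alone (real traffic not included)."""
    invocations = pings_per_minute * 60 * HOURS_PER_MONTH
    gb_seconds = invocations * ping_duration_s * memory_gb
    return invocations * prices.per_request + gb_seconds * prices.per_gb_second


def fargate_always_on_cost(prices, vcpu, memory_gb):
    """Monthly cost of one Fargate task running around the clock."""
    hourly = vcpu * prices.per_vcpu_hour + memory_gb * prices.per_gb_hour
    return HOURS_PER_MONTH * hourly
```

With one ping per minute, the warming approach comes to 43,200 invocations per month, so its cost is driven mostly by how long each ping runs and how much memory the function is configured with, while the Fargate cost depends only on the task size.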